Chris Mustazza

Ph.D. student, English Literature

Assistant:

Zoe Stoller

CAS '18 (English & Creative Writing)

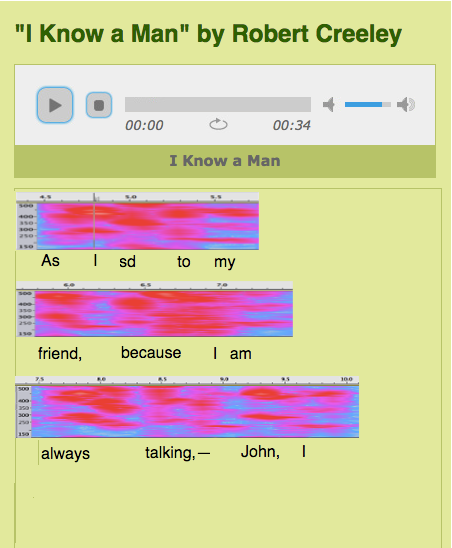

Machine-Aided Close Listening (MACL) is a reading-listening methodology meant to apprehend the materiality of poetic performance alongside the text of a poem. Rather than treating poetic sound recordings as recitations subordinated to the primacy of the printed word, MACL seeks to create space to discuss a poem's sonic materials (timing, tempo, pitch, the duration of the utterance) as parallel to its visual form (lineation, spacing, typography). In pursuit of this new methodology, one that allows for an examination of how sound can be illustrative of or contrapuntal to a poem's visual poetics, I have worked to develop several applications that foreground different aspects of poetic performance.

The first MACL app, completed last year, examined pitch and loudness in a poem by Robert Creeley. This app was the basis for my forthcoming *Digital Humanities Quarterly* essay on Machine-Aided Close Listening and for an invited lecture at the Max Planck Institute for Empirical Aesthetics. Over the summer, the Price Lab provided funding for an undergraduate fellow, Zoe Stoller, who worked with me to extend the MACL app to show the tempo of each performance at the line level, in words per minute, and to apply this new visualization to a Robert Frost poem. This work formed the basis for invited talks at the Robert Frost Society and at Concordia University in Montreal.
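Line-level tempo in words per minute is straightforward arithmetic once each line's start and end times in a recording are known: the line's word count divided by its duration in minutes. The Python sketch below is only an illustration of that calculation, not the MACL app's code. It assumes the timestamps already exist (how the app obtains them is not described here), and the `LineTiming` class, the function names, and the sample lines are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LineTiming:
    """A printed line of the poem and when it is spoken in one recording.

    Hypothetical structure: start and end are in seconds from the start of the
    recording and are assumed to come from some prior alignment step.
    """
    text: str
    start: float
    end: float


def words_per_minute(line: LineTiming) -> float:
    """Tempo of one line: number of words divided by duration in minutes."""
    duration = line.end - line.start
    if duration <= 0:
        raise ValueError(f"line has no positive duration: {line.text!r}")
    word_count = len(line.text.split())  # naive whitespace tokenization
    return word_count * 60.0 / duration


def line_tempos(lines: list[LineTiming]) -> list[float]:
    """Words-per-minute value for every line of a single performance."""
    return [words_per_minute(line) for line in lines]


if __name__ == "__main__":
    # Invented placeholder lines and timings, for illustration only.
    performance = [
        LineTiming("first line of the poem", 0.0, 2.0),
        LineTiming("and the second line", 2.5, 4.5),
    ]
    print(line_tempos(performance))  # [150.0, 120.0]
```

For example, a five-word line spoken over two seconds comes out at 150 words per minute. Splitting on whitespace is a deliberate simplification; contractions, hyphenated words, and enjambment would need an explicit counting convention in practice.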

This academic year, the Price Lab has continued to fund an RA position for me, which will conclude at the end of this term. We have built an all-new MACL app, one that compares multiple performances of the same poem to look for commonalities and differences. The aim is to see how the kind of venue where a poem is performed may shape the performance, and also to identify aural syntactical units that may resist legibility in the printed versions of the poems and that exist outside the sound implied by punctuation. This work will be presented for the first time at the Price Lab seminar series and then in an invited lecture at the Maryland Institute for Technology in the Humanities (MITH) in April. It will also figure in the pedagogy of a course I am teaching in the fall, "Poetry Audio Lab: Poetic Modernism & Sound Studies." At the end of Spring 2018 the project will conclude, and I will consider whether to apply for additional support next year.
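One simple way to look for commonalities and differences across performances is to line up per-line tempo values from each recording and rank the lines by how much they vary. The sketch below is a hypothetical illustration, not the new MACL app's actual method. It assumes every performance has already been reduced to a list of words-per-minute values, one per printed line, and it uses the coefficient of variation (population standard deviation divided by the mean) as the measure of spread. The function name and the venue labels in the example are invented.

```python
from statistics import mean, pstdev


def compare_performances(
    tempos: dict[str, list[float]],
) -> list[tuple[int, float, float]]:
    """Rank lines by how much their tempo varies across performances.

    tempos maps a (hypothetical) performance label to its per-line
    words-per-minute values. Returns (line_index, mean_wpm, cv) tuples,
    where cv is the coefficient of variation (population standard
    deviation / mean), sorted with the most variable lines first.
    """
    lengths = {len(values) for values in tempos.values()}
    if len(lengths) != 1:
        raise ValueError("need at least one performance, all with the same number of lines")
    n_lines = lengths.pop()

    rows = []
    for i in range(n_lines):
        values = [per_line[i] for per_line in tempos.values()]
        avg = mean(values)
        cv = pstdev(values) / avg if avg else 0.0
        rows.append((i, avg, cv))

    # Most variable lines first; Python's sort is stable, so ties keep line order.
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows


if __name__ == "__main__":
    # Invented tempo values for two performances of a two-line poem.
    example = {
        "studio recording": [150.0, 120.0],
        "live reading": [150.0, 90.0],
    }
    for line_index, avg, cv in compare_performances(example):
        print(f"line {line_index + 1}: mean {avg:.1f} wpm, variation {cv:.2f}")
```

Normalizing by the mean keeps a naturally fast line from looking more "variable" than a slow one simply because its numbers are larger; lines with near-zero variation would be candidates for shared habits of delivery, while highly variable ones may point to venue-dependent choices.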